A few days back, I got an opportunity to present at vForum 2013 in Mumbai, the financial capital of India. With more than 3000 participants across the 2 days of this mega event, it was definitely one of the biggest customer events in India. Along with my team, I was representing VMware Professional Services at vForum, and I was given the opportunity to present on the following topic:

"Architecting vSphere Environments - Everything you wanted to know!"

When we finalized the topic, I realized that presenting it in 45 minutes would be next to impossible. Architecting a vSphere environment involves so much complexity that one could write an entire book on it. However, the task at hand was to narrate it in the form of a presentation.

As I started planning the slides, I decided to focus on the architectural decisions that, in my experience, are the "most important ones". These decisions can make or break the virtual infrastructure. My other filtering criterion was to make sure I covered the GREY AREAS where I always see uncertainty, because this uncertainty can turn a good design into a bad one. In the end I came up with a final presentation that was received very well by the attendees. I thought of sharing the content with the entire community through this blog series. This is Part 1, where I will give you some key design considerations for vSphere clusters.

Before I begin, I would like to give credit to a number of VMware experts in the community. Their books and blogs, and the discussions I have had with them in the past, helped me create this content. This includes books and blogs by Duncan, Frank, Forbes Guthrie, Scott Lowe and Cormac Hogan, as well as some fantastic discussions with Michael Webster earlier this year.


Here are my thoughts on creating vSphere Clusters!!

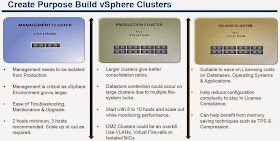

The message behind the slide above is to create vSphere clusters based on the purpose they need to fulfill in the IT landscape of your organization.

Management Cluster

The management cluster here refers to a cluster of 2 to 3 ESXi hosts that the IT team uses primarily to host all the workloads that make up the vSphere infrastructure. This includes VMs such as vCenter Server, the database server, vCOps, SRM, the vSphere Replication appliance, the vMA appliance, Chargeback Manager, etc. This cluster can also host other infrastructure components such as Active Directory, backup servers, anti-virus, etc. This approach has multiple benefits, such as:

- Security, due to the isolation of management workloads from production workloads. This gives the IT team complete control over the workloads that are critical to managing the environment.

- Ease of upgrading the vSphere Environment and related components without impacting the production workloads.

- Ease of troubleshooting issues within these components, since resources such as compute, storage and network are isolated and dedicated to this cluster.

A quick tip: make sure this cluster has at least 2 nodes, so that vSphere HA can protect workloads if one host goes down. A three (3) node management cluster would be ideal, since you would have the option of running maintenance tasks on ESXi servers without having to disable HA. You might want to consider using VSAN for this infrastructure, as this is the primary use case that both Rawlinson and Cormac suggest. Remember, VSAN is in beta right now, so make your choices accordingly.
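
To see why the third host matters, here is a minimal capacity sketch in Python. It is not how vSphere HA admission control actually computes capacity. It assumes identical hosts and treats the management VMs' load as one aggregate number, with hypothetical `host_capacity` and `vm_load` values in the same units (for example GHz or GB). It checks whether the cluster can still absorb one host failure while another host is in maintenance mode.

```python
def tolerates_failure_during_maintenance(hosts: int, host_capacity: float,
                                         vm_load: float) -> bool:
    """Can the cluster lose one host while another is in maintenance mode?

    Simplified model (assumption): all hosts are identical and the VM load
    is a single aggregate number in the same units as host_capacity.
    """
    # One host is in maintenance mode and one more host fails.
    surviving_hosts = hosts - 2
    if surviving_hosts < 1:
        # No host is left to restart the VMs on.
        return False
    return surviving_hosts * host_capacity >= vm_load


if __name__ == "__main__":
    # Hypothetical management workload that fits comfortably on one host.
    load = 40.0
    for size in (2, 3):
        ok = tolerates_failure_during_maintenance(size, host_capacity=64.0, vm_load=load)
        print(f"{size}-node cluster: still protected during maintenance = {ok}")
```

In a 2-node cluster, putting one host into maintenance mode leaves nothing to fail over to. A 3-node cluster keeps one spare host, as long as the management VMs fit on a single host.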

Production Clusters

As the name suggests, this cluster hosts all your production workloads. It is the heart of your organization, because it runs the business applications, databases and web services. Literally, this is what gives you the job of being a VMware architect or a virtualization admin. :)

Here are a few pointers to keep in mind while creating production clusters:

- The number of ESXi hosts in a cluster will impact your consolidation ratios in most cases. As a rule of thumb, you would reserve one ESXi host for HA failover in a 4-node cluster, but you could reserve the same single host in an 8-node cluster. Compared with two 4-node clusters, this frees up one ESXi host for running additional workloads. Yes, the HA calculations matter, and they can be based either on slot size or on a percentage of cluster resources.

- Always plan for at least 1 failover host per 8 to 10 ESXi servers. So in a 16-node cluster, do not stick with only 1 host for failover; take this number to at least 2. The additional failover node covers as much of the risk as possible.

- Large clusters come with benefits such as higher consolidation ratios, but they can have a downside if you do not have enterprise-class or rightly sized storage in your infrastructure. Remember, if a datastore is presented to a 16-node or 32-node cluster and the VMs on that datastore are spread across the cluster, you might run into contention for SCSI locking. If you are using VAAI, ATS (Atomic Test & Set) will reduce this. Still, start small and grow gradually, and check that your storage behavior is not being impacted.

- Having separate ESXi servers for DMZ workloads is OLD SCHOOL. This was done to create physical boundaries between servers, and it is a true burden carried over from the physical world to the virtual one. It's time to shed that load and use mature technologies such as VLANs to create logical isolation zones between internal and external networks. In the worst case, you might want to use separate network cards and physical network fabric, but you can still run on the same ESXi servers. This gives you better consolidation ratios while ensuring the level of security required in an enterprise.
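
The HA arithmetic behind these pointers can be sketched in a few lines of Python. This minimal sketch covers only the percentage-based policy and assumes identical hosts. Slot-size calculations depend on per-VM reservations and are not modeled here. The function names and the `hosts_per_failover` parameter are hypothetical; they simply encode the rule of thumb of one failover host per 8 to 10 hosts.

```python
import math


def failover_hosts(cluster_size: int, hosts_per_failover: int = 8) -> int:
    """Rule of thumb: at least 1 failover host per 8 to 10 hosts.

    hosts_per_failover is an assumed tunable; 8 is the conservative end.
    """
    return max(1, math.ceil(cluster_size / hosts_per_failover))


def reserved_percentage(cluster_size: int, failover: int) -> float:
    """Share of cluster CPU/memory to reserve with percentage-based
    admission control, assuming all hosts are identical."""
    return 100.0 * failover / cluster_size


def usable_hosts(cluster_size: int, failover: int) -> int:
    """Hosts' worth of capacity left for running workloads."""
    return cluster_size - failover


if __name__ == "__main__":
    for size in (4, 8, 16, 32):
        f = failover_hosts(size)
        print(f"{size:>2} hosts: {f} failover, "
              f"reserve {reserved_percentage(size, f):.1f}%, "
              f"{usable_hosts(size, f)} usable")

    # Two 4-node clusters vs one 8-node cluster, one failover host each.
    two_small = 2 * usable_hosts(4, 1)
    one_large = usable_hosts(8, 1)
    print(f"2 x 4-node: {two_small} usable, 1 x 8-node: {one_large} usable")
```

With the default of 8, a 16-node cluster gets 2 failover hosts (12.5% reserved). One 8-node cluster leaves 7 hosts' worth of capacity, versus 6 across two 4-node clusters. That difference is the one host saved in the first pointer.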

Island Clusters

Yes, they sound fancy, but the concept of island clusters, as laid down in my slides, is to run islands of ESXi servers (small groups) that host workloads with special licensing requirements. I do not appreciate how some vendors apply illogical licensing policies to their applications, middleware and databases. Even so, this is a great way of avoiding all the hustle and bustle created by sales folks. Some examples of island clusters include:

- Running Oracle databases/middleware/applications on their own dedicated clusters. This not only lets you consolidate more on a small cluster of ESXi hosts and save money. It also lets you ZIP the mouth of your friendly sales guy by being in what they consider License Compliance.

- I have customers who have used island clusters for operating systems such as Windows. This also helps you save on licensing for the Datacenter, Enterprise or Standard editions of the Windows OS.

- Another important benefit of this approach is that it helps ESXi use the Transparent Page Sharing (TPS) memory management technique more efficiently. VMs running the same operating system and applications are likely to have many duplicate pages in the physical memory of your ESXi servers. I have seen the savings go up to 30 percent. You can pull this figure from a vCenter Operations Manager report if you have it installed in your virtual infrastructure.
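
Like-for-like VMs share more memory, and a toy model of content-based page sharing in Python shows why. This is not ESXi's implementation; it is a sketch that assumes 4 KB pages, uses SHA-1 as an arbitrary stand-in hash, and works on made-up page contents. Identical pages are found by hash, confirmed with a full byte comparison, and counted as shared.

```python
import hashlib
from collections import defaultdict

PAGE_SIZE = 4096  # bytes; TPS works on 4 KB small pages


def share_pages(pages: list[bytes]) -> tuple[int, int]:
    """Deduplicate identical pages; return (pages kept, bytes saved).

    Simplified model of content-based page sharing: hash each page, and on
    a hash match do a full byte comparison before treating it as shared.
    """
    buckets: dict[bytes, list[bytes]] = defaultdict(list)
    shared = 0
    for page in pages:
        candidates = buckets[hashlib.sha1(page).digest()]
        if any(existing == page for existing in candidates):
            shared += 1  # would be mapped copy-on-write to the existing copy
        else:
            candidates.append(page)
    kept = sum(len(c) for c in buckets.values())
    return kept, shared * PAGE_SIZE


if __name__ == "__main__":
    # Hypothetical island cluster: 3 VMs with the same 3 "OS" pages
    # plus one page of unique data each (12 pages in total).
    os_pages = [bytes([i]) * PAGE_SIZE for i in range(3)]
    vms = [os_pages + [bytes([100 + n]) * PAGE_SIZE] for n in range(3)]
    all_pages = [page for vm in vms for page in vm]
    kept, saved = share_pages(all_pages)
    total = len(all_pages) * PAGE_SIZE
    print(f"kept {kept} of {len(all_pages)} pages, saved {100 * saved / total:.0f}%")
```

Every VM in this toy cluster carries the same OS pages, so half of its memory collapses into shared copies. Mixing unrelated guest operating systems on the same hosts would leave fewer matches. Later vSphere releases restrict inter-VM page sharing by default (TPS salting), so real savings also depend on version and configuration.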

With this I will close this article. I was hoping to give you a quick scoop in each of these parts, but this article is already four pages long :). I hope this helps you make the right choices for your virtual infrastructure when it comes to vSphere clusters.

Stay tuned for the other parts in the near future…

As always – Share & Spread the Knowledge!!

Very nice and comprehensive article!!

Thanks.. Glad you liked it!! Stay tuned for more stuff..

Hi Sunny,

Thanks for sharing this great article. I have a question regarding workload types in virtual clusters.

With the onset of cloud (and all the hype that is associated with it), do you think this will impact the assignment of workload types to virtual clusters? For example, dedicated VMware clusters for Production/Test/Dev.

To give you an idea of where I'm coming from: in the organisation I work for (as VMware admin), we have a vision from IT management of Cloud first, Virtualization second and physical by exception. Our cloud and virtual infrastructure run on separate hardware.

We have all the cluster types that you have described (Mgmt, Production/Test/Dev and Island or application-specific clusters). However, in a cloud-type setup a user will not know whether the other VMs on the same ESX host are Production/Test/Dev workloads.

Do you think there is still an argument for separating Production/Dev/Test workloads in virtual clusters?

I am in the process of re-designing my vmware environment to drive higher utilisation of the hardware.

One of the proposals I have presented is to merge all workload types and manage clusters only by hardware type, monitoring for utilisation & contention. I would be interested in your view on this proposal.

Superfly,

I completely understand where you are coming from. However, it is important to have a clear segregation between a public cloud and a private cloud. That segregation lets us define the landscape of workloads one would want to run in the cloud. I like that you have a policy of Cloud First and Virtualization second; in essence, though, a cloud is not possible without virtualization. So the first stage is virtualization. Adding the layers of self-service, automation, chargeback and virtual machine lifecycle management turns it into a comprehensive cloud solution, which can be either on-premise or off-premise.

I would assume that you have both the virtual and cloud resources inside your datacenter. I believe we should look at a common pool of resources in a cloud infrastructure. Even so, you can have a cloud environment with a separate provider vDC coming from each cluster of resources, so each type of workload (e.g. databases, applications) gets its own provider vDC.

Sunny, yes, we have both virtual and cloud resources inside our datacenter. A small number of our infrastructure consumers are going to a public cloud provider to bypass the (time-consuming) internal processes for deploying VMs. It has been deemed too difficult to address the delays in these deployment processes. Management has therefore decided that we can set up a private cloud as an alternative to managed VMs. The owners are to take over supporting their own VMs. The view is that eventually all VMs will be managed in this fashion, hence the cloud-first vision statement. I've been asked to be more cloud-like in the management of our VMware infrastructure. Therefore, do workload definitions still apply if we are trying to replicate a public cloud internally?

Thanks for your reply.

Awesome article as always! You always provide the extra dimension to the admin team, which is always a great help! Keep it coming, buddy.

Superfly - You might not want to replicate the public cloud within an organization, as the dynamics of a public cloud are completely different. The biggest difference is how OEMs license their products for service/cloud providers and hosting companies compared to enterprises. Here the public clouds have an edge because they have volumes which a private cloud might not have at all. I would still go for island clusters and create provider virtual datacenters from them. I would tie those to specific templates such as database, middleware, etc., which can help me save on license costs while also automating things for me...

@Santhosh - Thanks for the kind words.. Glad you liked the article..